Learning High-Risk High-Precision Motion Control

In Proceedings of ACM SIGGRAPH Conference on Motion, Interaction and Games (MIG) 2022

Video

Paper: HTML

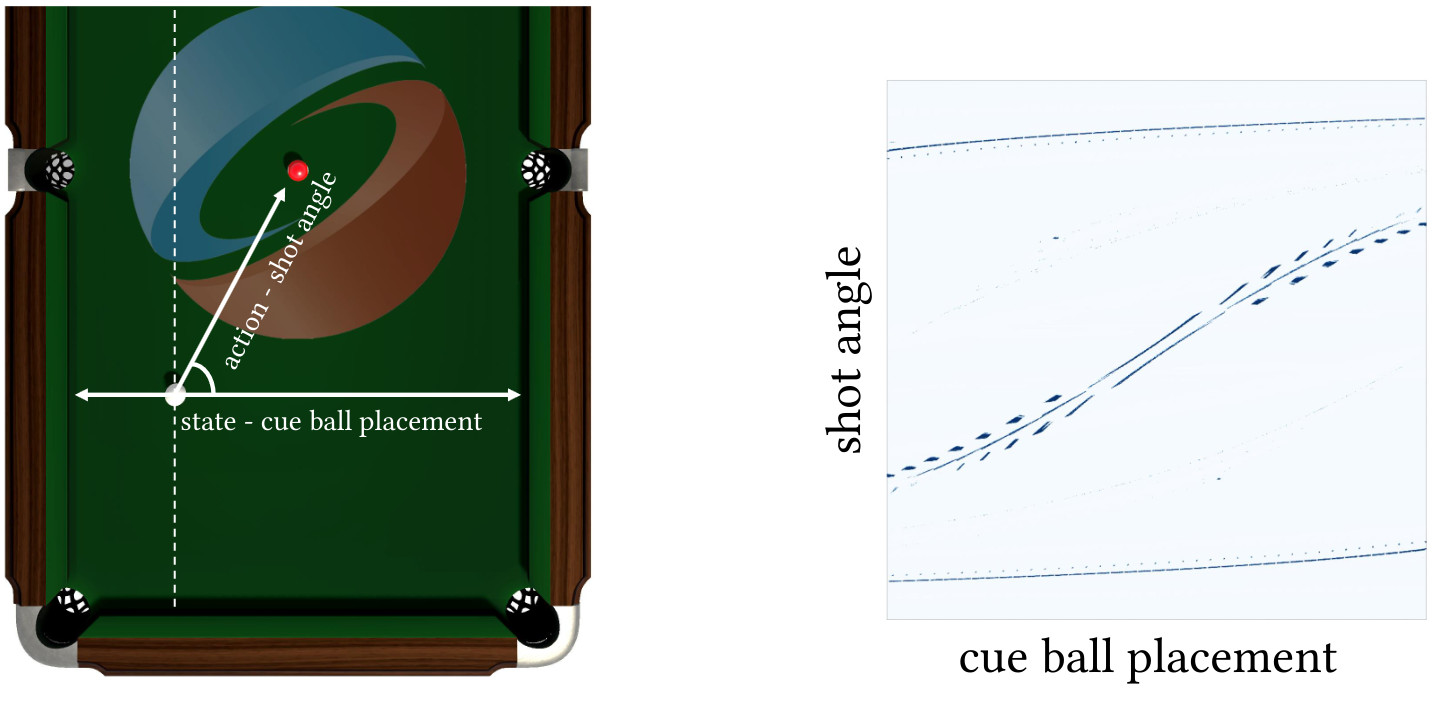

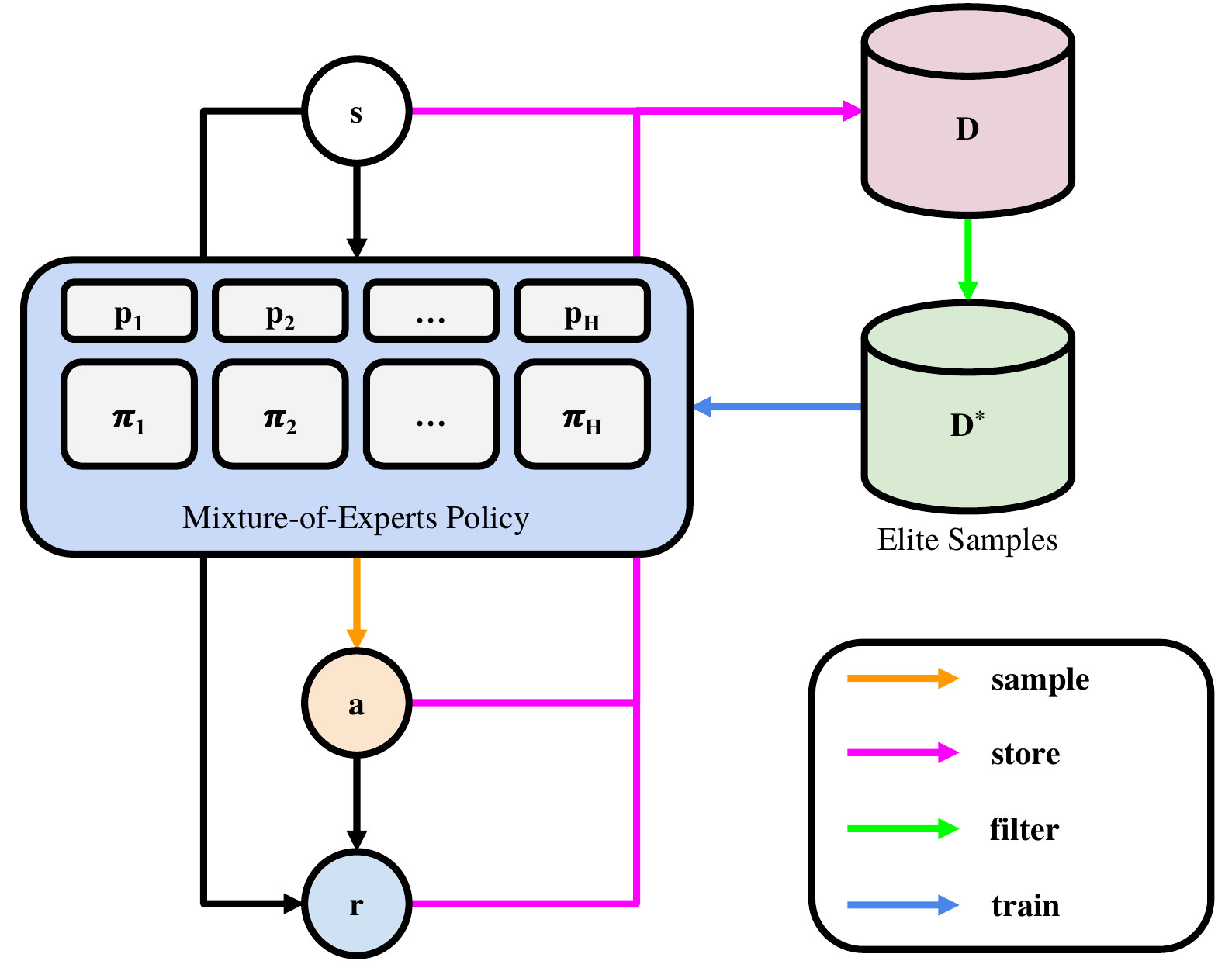

Deep reinforcement learning (DRL) algorithms for movement control are typically evaluated and benchmarked on sequential decision tasks where imprecise actions can be corrected by later actions, so high returns remain achievable even with noisy actions. In contrast, we focus on an under-researched class of high-risk, high-precision motion control problems where actions have irreversible outcomes, producing sharp peaks and ridges in the state-action reward landscape. Using computational pool as a representative example of such problems, we propose and evaluate State-Conditioned Shooting (SCOOT), a novel DRL algorithm that builds on advantage-weighted regression (AWR) with three key modifications: 1) performing policy optimization using only elite samples, allowing the policy to better latch onto the rare high-reward action samples; 2) utilizing a mixture-of-experts (MoE) policy, to allow switching between reward landscape modes depending on the state; 3) adding a distance regularization term and a learning curriculum to encourage exploring diverse strategies before adapting to the most advantageous samples. We showcase the method's performance in learning physically based billiard shots, demonstrating high action precision and the discovery of multiple shot strategies for a given ball configuration.
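To make the first modification concrete, here is a minimal sketch of elite-sample advantage weighting, assuming the standard AWR weight exp(A / temperature). The function name `elite_awr_weights` and the parameters `elite_frac`, `temperature`, and `max_weight` are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np

def elite_awr_weights(advantages, elite_frac=0.1, temperature=1.0, max_weight=20.0):
    """Return per-sample regression weights that are nonzero only for elite samples.

    Assumption: "elite" means the top `elite_frac` fraction of samples by advantage.
    """
    advantages = np.asarray(advantages, dtype=float)
    # Keep at least one sample so the weighted regression is never empty.
    n_elite = max(1, int(np.ceil(elite_frac * advantages.size)))
    # Indices of the n_elite largest advantages.
    elite_idx = np.argsort(advantages)[-n_elite:]
    weights = np.zeros_like(advantages)
    # Standard AWR exponential weighting, clipped for numerical stability.
    weights[elite_idx] = np.minimum(
        np.exp(advantages[elite_idx] / temperature), max_weight
    )
    return weights

# Usage: only the two highest-advantage samples receive nonzero weight.
w = elite_awr_weights([-1.0, 0.0, 2.0, 0.5], elite_frac=0.5)
```

In a full training loop, these weights would multiply each sample's policy log-likelihood in the regression loss, so discarded samples contribute nothing to the update.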

Summary

We diagnose the difficulty of high-precision motion control by visualizing the reward landscape, which shows sparse, sharp, and multi-modal reward ridges. Capturing the sparse reward ridges requires stringent pruning of negative-advantage samples, and capturing multiple solution modes requires a mixture-model policy. We devise simple modifications to an existing off-policy DRL algorithm, Advantage-Weighted Regression (AWR), to accommodate these challenges.
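As an illustration of why a mixture-model policy helps with multi-modal ridges, the following sketch computes the log-likelihood of an action under a mixture of diagonal Gaussians with gating logits. It assumes the gating logits, means, and log standard deviations have already been produced from the state by some network; the function names and array shapes are hypothetical and not taken from the paper.

```python
import numpy as np

def log_softmax(x):
    """Numerically stable log-softmax over a 1-D array."""
    x = x - np.max(x)
    return x - np.log(np.sum(np.exp(x)))

def moe_log_prob(action, gate_logits, means, log_stds):
    """Log-density of `action` under a K-expert diagonal Gaussian mixture.

    action: shape (D,); gate_logits: shape (K,); means, log_stds: shape (K, D).
    """
    action = np.asarray(action, dtype=float)
    means = np.asarray(means, dtype=float)
    log_stds = np.asarray(log_stds, dtype=float)
    # Per-expert Gaussian log-density, summed over action dimensions.
    z = (action - means) / np.exp(log_stds)
    comp = -0.5 * np.sum(z ** 2 + 2.0 * log_stds + np.log(2.0 * np.pi), axis=1)
    # Combine with gating log-probabilities via a stable log-sum-exp.
    joint = log_softmax(np.asarray(gate_logits, dtype=float)) + comp
    m = np.max(joint)
    return m + np.log(np.sum(np.exp(joint - m)))

# Usage: two narrow experts at -1 and +1 model two separate reward ridges.
means = [[-1.0], [1.0]]
log_stds = [[-2.0], [-2.0]]
on_ridge = moe_log_prob([1.0], [0.0, 0.0], means, log_stds)
between = moe_log_prob([0.0], [0.0, 0.0], means, log_stds)
```

A single Gaussian fit to both ridges would place its mean between them, where the reward is low; the mixture instead assigns high likelihood to each ridge and low likelihood to the gap.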

Cite Us

@inproceedings{kim2022learning,
  title={Learning High-Risk High-Precision Motion Control},
  author={Kim, Nam Hee and Kirjonen, Markus and H{\"a}m{\"a}l{\"a}inen, Perttu},
  booktitle={Proceedings of the 15th ACM SIGGRAPH Conference on Motion, Interaction and Games},
  pages={1--10},
  year={2022}
}